Before reading this article, please check out our Techniblogic post on camera megapixels, “Things to know about Smartphone Camera: Megapixels”.

Now you know that megapixels alone don’t determine image quality in a smartphone. The camera sensor plays a large role in the quality of the raw picture. So, what is a sensor?

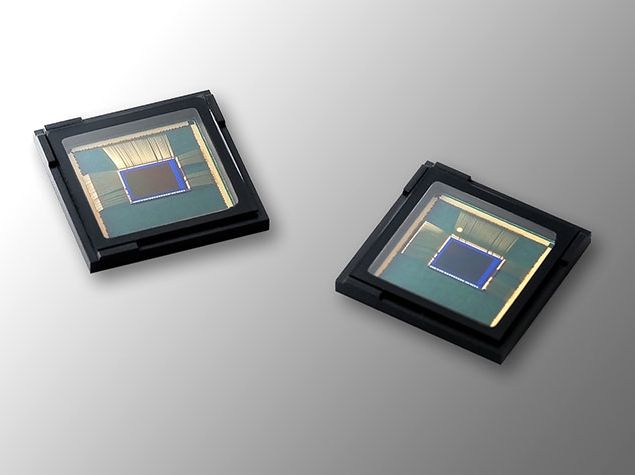

An image sensor or imaging sensor is a sensor that detects and conveys the information that constitutes an image. It does so by converting the variable attenuation of waves into signals, the small bursts of current that convey the information. The waves can be light or other electromagnetic radiation. Image sensors are used in electronic imaging devices of both analog and digital types.

A camera uses an array of millions of tiny light cavities or “photosites” to record an image. When you press your camera’s shutter button and the exposure begins, each of these is uncovered to collect and store photons. Once the exposure finishes, the camera closes each of these photosites, and then tries to assess how many photons fell into each. The relative quantity of photons in each cavity is then sorted into one of several intensity levels, whose precision is determined by bit depth (0–255 for an 8-bit image).
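To make the last step concrete, here is a minimal sketch of how photon counts could be sorted into intensity levels. It assumes a simple linear mapping and a hypothetical `full_well` value (the photon count at which a photosite saturates); real cameras also apply gain and tone curves, so treat this as an illustration only.

```python
def quantize(photon_counts, full_well, bit_depth=8):
    """Map photon counts to integer intensity levels (linear mapping, an assumption)."""
    max_level = (1 << bit_depth) - 1  # 255 for an 8-bit image
    levels = []
    for count in photon_counts:
        # A photosite cannot report less than 0 or more than its saturation point.
        clipped = min(max(count, 0), full_well)
        # Integer arithmetic with round-half-up to the nearest level.
        levels.append((clipped * max_level + full_well // 2) // full_well)
    return levels


# Hypothetical photon counts for five photosites, saturating at 10,000 photons.
counts = [0, 2500, 5000, 10000, 12000]
print(quantize(counts, full_well=10000))  # [0, 64, 128, 255, 255]
```

Note how the last photosite received more photons than it can hold, so it is clipped to the top level. This is what a blown-out highlight looks like in the data.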

Cavity Array:

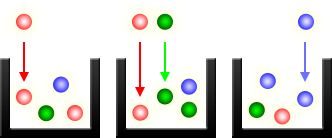

Light Cavity:

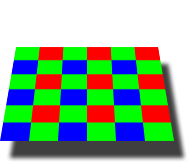

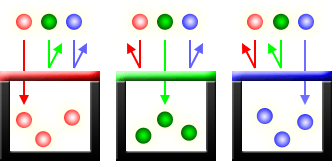

However, the above illustration would only create grayscale images. To capture colour images, a filter has to be placed over each cavity that permits only particular colours of light. Virtually all current cameras can only capture one of three primary colours in each cavity, and so they discard roughly 2/3 of the incoming light. As a result, the camera has to approximate the other two primary colours in order to have full colour at every pixel.
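The idea can be sketched in a few lines of code. This example assumes the common Bayer layout (one red, two green and one blue filter in every 2×2 block) and uses a deliberately naive neighbour average to approximate a missing colour. The function names are made up for illustration, and real cameras use far more sophisticated interpolation.

```python
CHANNEL_INDEX = {"R": 0, "G": 1, "B": 2}


def bayer_channel(row, col):
    """Return the colour passed by the filter over photosite (row, col), RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(rgb_image):
    """Keep only the filtered channel at each photosite; the other two are discarded."""
    return [
        [pixel[CHANNEL_INDEX[bayer_channel(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb_image)
    ]


def estimate_green(raw, row, col):
    """Approximate green at a red or blue site by averaging its green neighbours."""
    neighbours = [(row - 1, col), (row + 1, col), (row, col - 1), (row, col + 1)]
    values = [
        raw[r][c]
        for r, c in neighbours
        if 0 <= r < len(raw) and 0 <= c < len(raw[0])
    ]
    return sum(values) / len(values)


# A uniform 4x4 test scene where every point has the colour (R=200, G=100, B=50).
scene = [[(200, 100, 50)] * 4 for _ in range(4)]
raw = mosaic(scene)
print(raw[0])                     # [200, 100, 200, 100]
print(raw[1])                     # [100, 50, 100, 50]
print(estimate_green(raw, 0, 0))  # 100.0
```

Each photosite stores one number out of the three that describe the scene at that point, which is where the "roughly 2/3 discarded" figure comes from. The missing values have to be estimated from neighbouring photosites.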

Color Filter Array:

Photosites with Color Filters:

Megapixel marketing has served camera manufacturers well over the years, so they keep raising megapixel counts even when the increase is effectively meaningless. But consumers are getting wise to it. We’ve all seen dodgy images from higher-megapixel cameras and know that, after a point, megapixels don’t matter for most people. A 16 MP smartphone camera isn’t ever going to be as good as a 12 MP full-frame DSLR. Why?

Sensor size

The size of a camera’s sensor ultimately determines how much light it can use to create an image. In very simple terms, a bigger sensor can capture more detail than a smaller sensor.

For example, if you had a compact camera with a typically small image sensor, its photosites would be dwarfed by those of a DSLR with the same number of megapixels but a much bigger sensor. Each larger photosite collects more light, so it can gather more information. The large DSLR photosites would be capable of turning out photos with better dynamic range, less noise and improved low-light performance than those of its smaller-sensor sibling.
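A rough back-of-the-envelope calculation shows how large the difference can be. The sketch below assumes square photosites that fill the whole sensor with no gaps. It uses 36 × 24 mm for a full-frame sensor and roughly 6.17 × 4.55 mm as an illustrative small sensor (a commonly quoted size for the 1/2.3-inch format); the actual sensor in any given phone may differ.

```python
import math


def photosite_pitch_um(width_mm, height_mm, megapixels):
    """Approximate side length of one photosite in micrometres.

    Assumes square photosites covering the whole sensor area with no gaps.
    """
    area_um2 = (width_mm * 1000) * (height_mm * 1000)
    return math.sqrt(area_um2 / (megapixels * 1_000_000))


full_frame = photosite_pitch_um(36.0, 24.0, 12)    # 12 MP full-frame DSLR
small_sensor = photosite_pitch_um(6.17, 4.55, 16)  # 16 MP small sensor (illustrative)

print(f"Full frame:   {full_frame:.2f} µm per photosite")
print(f"Small sensor: {small_sensor:.2f} µm per photosite")
print(f"Area ratio:   {(full_frame / small_sensor) ** 2:.0f}x")
```

Under these assumptions, each full-frame photosite is about 8.5 µm across versus about 1.3 µm on the small sensor, which works out to roughly 40 times the light-collecting area per photosite.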

Types of Sensors

There isn’t just one kind of sensor. There are many kinds: good, bad, large, small. Currently, most manufacturers use technologies such as BSI-CMOS, PDAF, ISOCELL, stacked CMOS, RGBC filters and more.

All of these terms refer to the construction of the sensor, the sensitivity of each pixel, and the susceptibility to noise. BSI stands for backside illumination, and is a method of producing a camera sensor where the photodetectors are layered above the transistors and other components, so light reaches them without first passing through the wiring. It’s a more complex method of CMOS production, but it reduces the light lost to reflection and blocking, which in turn improves the light-capturing ability of the sensor. BSI sensors are found in nearly all high-end smartphones.

ISOCELL is Samsung-specific technology for their BSI sensors, which places barriers between each photodetector to reduce crosstalk, improving sharpness and colour accuracy, especially in low-light situations. Crosstalk is where photoelectrons bleed between the pixels, causing bloom and halo effects in certain conditions, so reducing these effects is important in producing clean images. Stacked CMOS technology is found in Sony sensors, again improving the ability to capture light by pushing some parts of the circuitry below the pixel array. It’s found in Exmor RS sensors alongside BSI technology.

The RGBC filter made some noise at the time of the Moto X, and is found in some of OmniVision’s high-end sensors. Instead of a Bayer RGBG filter, OmniVision adds a clear pixel to the filter, which improves low-light performance by passing full brightness information through to the processing hardware. The camera then converts this into Bayer-pattern data for the SoC’s image signal processor.
